Overview

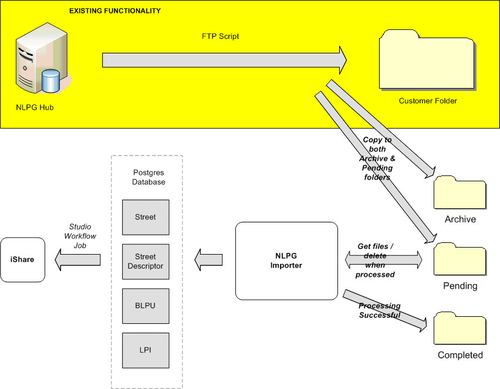

The NLPG Importer allows for the importing of NLPG supplied files in DTF 7.3 format which may then be used in iShare as an address lookup. As iShare will only be performing an address lookup (with property easting and northing) we only need to process the STREET (11), STREET DESCRIPTOR (15), BLPU (21) and LPI (24) records.

As well as importing new files supplied by the NLPG, either Full or COU, the NLPG Importer has a “Refresh all / Reset” function where the whole history is loaded in and the scripts work off the age of the files.

When the NLPG Importer runs a log file will be written to the Logs sub folder where the Python script resides e.g. C:\Python\NLPG Importer\Logs.

Installation & Configuration

Pre-Requisites

The NLPG Importer requires PyGreSQL, the PostgreSQL module for Python, which may be installed from the following location http://www.pygresql.org/. PyGreSQL is an open-source Python module that interfaces to a PostgreSQL database.

Install & Configure Settings

First install and configure the NLPG Importer by doing the following:

- Step 1 - Extract the contents of the supplied zip file & create the folders for the NLPG Importer

- Step 2 - Create the database and add the database scripts

- Step 3 - Configure Settings

Create Job & Tasks in Studio

Now that you have created and configured the database ready for receiving the NLPG data you will need to do the following:

You can create one more additional Job and Task which you would only run if you needed to rebuild the NLPG database from scratch:

The Import Process

In order to import your NLPG data into Data Share and keep it up-to-date you will need to do the following:

Process the NLPG data files

Files received from the NLPG hub will be copied, by the customer, into a processing folder (Pending) and an archive folder (Archive). The importer will then process each file in order of datestamp (oldest first). Once a file has been successfully processed it will be placed into a Completed folder.

The NLPG Importer will iterate through the file line by line and will copy the contents of the line into one of four separate csv files.

This will be done for the following record types:

REC_ID | Description |

|---|---|

11 | Street |

15 | Street Descriptor |

21 | BLPU |

24 | LPI |

Once the file processing has finished, the importer will then attempt to copy the files into their respective tables using the Postgres 'COPY INTO' command. Each imported row will be given its own sequence number column in the database table. There will be four tables created, one for each of the record identifiers above.

Import them into Data Share

A View of the latest data will be created in the NLPG Importer database for each of the four tables.

The view for each table will be created by selecting the records with the highest sequence number per Unique Identifier. If the change type is deleted, then the record will not be included in the view.

The Unique Identifiers for each record type are as follows:-

Record Type | Unique Identifier Field(s) |

|---|---|

Street (11) | USRN |

Street Descriptor (15) | USRN+LANGUAGE |

BLPU (21) | UPRN |

LPI (24) | LPI_KEY |

A Data Share connection to each of the four views in the NLPG Postgres database will need to be created in iShare Studio.

Automate the NLPG Import process

In order to automate the NLPG Import process a Task will need to be created in the iShare Studio Workflow Manager to run the NLPG Importer and then a Workflow Job created to perform the following tasks:

- Run the NLPG Importer

- Download the NLPG data to Data Share

- Rebuild the Address lookup table using PostgreSQL stored procedures

Now you can schedule the Job to run at a particular time.

Re-Importing the NLPG Data

If there is a problem with the import, it will be possible to restart the import again, starting from the first file received using the Archive folder. The process will be as follows:-

- All records are deleted from each of the four tables

- All files in the Pending folder will be deleted

- All files are copied from the Archive folder to the Pending folder

The process will then continue as normal until all files have been processed.